What it is, why it matters, how to measure it, and how to improve your cache hit rate.

1. Prompt Caching Basics

Model prompts often include repeated content — such as system instructions and shared context. When a request contains a prefix the system has recently processed, OpenAI can route it to a server that already computed that prefix, allowing the model to reuse prior work instead of recomputing it from scratch. Prompt Caching can reduce time-to-first-token latency by up to 80% and input token costs by up to 90%. It works automatically on all API requests and has no additional fees. The goal of this cookbook is to go deeper on optimizing for cache hits. Review our API docs and Prompt Caching 101 for a great overview - let’s dive in!

1.1 Basics

- Cache hits require an exact, repeated prefix match and work automatically for prompts that are 1024 tokens or longer. Matching proceeds in 128-token blocks and stops at the first mismatched token.

- The entire request prefix is cacheable: messages, images, audio, tool definitions, and structured output schemas.

- Cache hits come when requests route to the same server. Take advantage of the prompt_cache_key for traffic that shares common prefixes to improve that routing.

- Carefully consider the impact on caching of context engineering, including compaction and summarization.

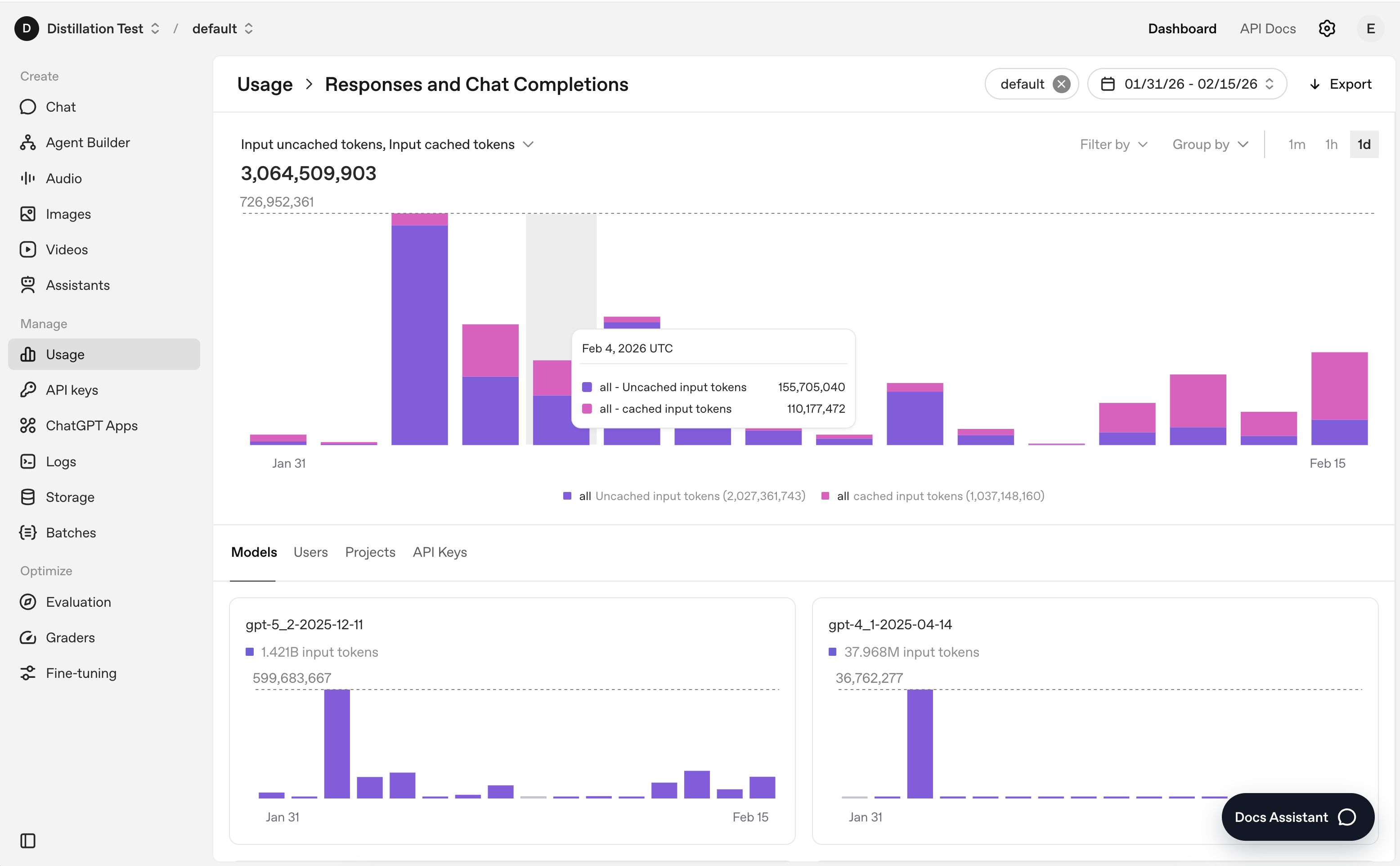

- Monitor caching, cost and latency via request logs or the Usage dashboard while iterating.
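The prefix-matching rule above can be sketched as a small estimator. This is a simplified mental model, not the actual server logic: the function name and the assumption that a shared prefix below 1024 tokens yields no hit are illustrative, and real hits also depend on routing and cache lifetime.

```python
from typing import Sequence

BLOCK_SIZE = 128         # cache hits are counted in 128-token blocks
MIN_PROMPT_TOKENS = 1024 # prompts shorter than this are not cached


def estimate_cached_tokens(previous: Sequence[int], current: Sequence[int]) -> int:
    """Estimate cached tokens for `current`, given a previously seen prompt.

    Hypothetical helper: counts the exact shared prefix, then rounds down
    to a whole number of 128-token blocks.
    """
    if len(current) < MIN_PROMPT_TOKENS:
        return 0
    shared = 0
    for old_token, new_token in zip(previous, current):
        if old_token != new_token:
            break  # matching stops at the first mismatched token
        shared += 1
    cached = (shared // BLOCK_SIZE) * BLOCK_SIZE
    return cached if cached >= MIN_PROMPT_TOKENS else 0


# A 2006-token prompt that shares its first 1930 tokens with an earlier one.
earlier = list(range(2000))
later = list(range(1930)) + [-1] * 76
print(estimate_cached_tokens(earlier, later))
```

Under this model a single changed token near the start of the prompt drops the estimate to zero, which is why the rest of this guide focuses on keeping the prefix stable.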

2. Why caching matters

2.1 Core Technical Reason: Skipping Prefill Compute

The forward pass through transformer layers for input tokens is a dominant part of inference work. If you can reuse per-layer key/value tensors (the KV cache), you avoid recomputing those layers for cached tokens and only pay the lookup plus new-token compute.

2.2 Cost Impact

Cache discounts can be significant. Discount magnitude varies by model family - our newest models have been able to offer steeper cache discounts (90%) as our inference stack has become more efficient. Here are some examples, but see our pricing page for all models. Prompt Caching is enabled for all recent models, gpt-4o and newer.

| Model | Input (per 1M tokens) | Cached input (per 1M tokens) | Caching Discount |
|---|---|---|---|
| gpt-4o | $2.50 | $1.25 | 50.00% |
| gpt-4.1 | $2.00 | $0.50 | 75.00% |
| gpt-5-nano | $0.05 | $0.005 | 90.00% |
| gpt-5.2 | $1.75 | $0.175 | 90.00% |
| gpt-realtime (audio) | $32.00 | $0.40 | 98.75% |

2.3 Latency Impact

Reducing time-to-first-token (TTFT) is a major motivation for improving cache rates. Cached tokens can reduce latency by up to ~80%. Caching keeps latency roughly proportional to generated output length rather than total conversation length because the full historical context is not re-prefilled. I ran a series of prompts 2300 times and plotted the cached vs uncached requests. For the shortest prompts (1024 tokens), cached requests are 7% faster, but at the longer end (150k+ tokens) we’re seeing 67% faster TTFT. The longer the input, the bigger the impact of caching on that first-token latency.

3. Measure caching first (so you can iterate)

3.1 Per-request: cached_tokens

Every response reports how many prompt tokens were served from cache. In Chat Completions this is the cached_tokens field under usage.prompt_tokens_details; the Responses API reports the same count under usage.input_tokens_details.

```json
"usage": {
  "prompt_tokens": 2006,
  "completion_tokens": 300,
  "total_tokens": 2306,
  "prompt_tokens_details": {
    "cached_tokens": 1920
  },
  "completion_tokens_details": {
    "reasoning_tokens": 0,
    "accepted_prediction_tokens": 0,
    "rejected_prediction_tokens": 0
  }
}
```

You can also get a high-level overview by filtering on cached versus uncached tokens in the Usage dashboard.
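To track this per request, a small helper can turn the usage object into a hit rate. The sketch below assumes the usage payload has already been converted to a plain dict; the helper name is illustrative, and it accepts both the Chat Completions field names shown above and the Responses API equivalents (input_tokens, input_tokens_details).

```python
def cache_hit_rate(usage: dict) -> float:
    """Return cached_tokens / prompt tokens for one request (0.0 if unknown)."""
    if "prompt_tokens" in usage:
        # Chat Completions field names
        total = usage["prompt_tokens"]
        details = usage.get("prompt_tokens_details") or {}
    else:
        # Responses API field names
        total = usage.get("input_tokens", 0)
        details = usage.get("input_tokens_details") or {}
    cached = details.get("cached_tokens", 0)
    return cached / total if total else 0.0


sample_usage = {
    "prompt_tokens": 2006,
    "completion_tokens": 300,
    "total_tokens": 2306,
    "prompt_tokens_details": {"cached_tokens": 1920},
}
print(f"{cache_hit_rate(sample_usage):.1%}")
```

Logging this value alongside latency for every request gives you a baseline before you change anything in the prompt.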

4. Improve cache hit rate (tactical playbook)

What’s a cache hit? It’s when a request starts with the same prefix as a previous request, allowing the system to reuse previously computed key/value tensors instead of recomputing them. This reduces both time-to-first-token latency and input token cost, so maximizing cache hits is our goal!

Tangible Example: Multi-Turn Chat

In a multi-turn chat, every request resends the full conversation. If your prefix (instructions + tools + prior turns) stays stable, all of that gets served from cache, and only the newest user message at the end consists of unseen tokens. In usage, you should see cached_tokens ≈ total prompt tokens minus the latest turn.

4.1 Send a Prompt over 1024 tokens

It may seem counterintuitive, but you can save money by lengthening your prompt beyond ~1024 tokens to trigger caching. Say you have a 900-token prompt: you’ll never get a cache hit. If you lengthen your prompt to 1100 tokens and get a 50% cache rate, you’ll save about 33% on input token costs (assuming a 90% cached-input discount: 550 + 0.1 × 550 = 605 token-equivalents versus 900). If you get a 70% cache rate, you’d save about 55%.
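The arithmetic behind these figures can be reproduced with a few lines. This is a sketch assuming a 90% cached-input discount (as for the newest models in the table in 2.2); the function names are illustrative, and "cache rate" is treated as the fraction of prompt tokens billed at the cached price.

```python
def effective_input_tokens(prompt_tokens: float, cache_rate: float,
                           cached_discount: float = 0.90) -> float:
    """Prompt size expressed in full-price token equivalents."""
    cached = prompt_tokens * cache_rate
    uncached = prompt_tokens - cached
    return uncached + cached * (1.0 - cached_discount)


def savings_vs_uncached(baseline_tokens: float, new_tokens: float,
                        cache_rate: float, cached_discount: float = 0.90) -> float:
    """Fractional saving of a longer, cached prompt over a shorter, uncached one."""
    new_cost = effective_input_tokens(new_tokens, cache_rate, cached_discount)
    return 1.0 - new_cost / baseline_tokens


for rate in (0.5, 0.7):
    saving = savings_vs_uncached(900, 1100, rate)
    print(f"cache rate {rate:.0%}: saving {saving:.1%}")
```

With a smaller discount the break-even cache rate is higher, so it is worth rerunning the numbers with the prices for your own model.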

4.2 Stabilize the Prefix

This is the lowest hanging fruit. Make the early portion of the request as stable as possible:

- Instructions

- Tool definitions

- Schemas

- Examples

- Long reference context

Move volatile content (e.g. user text, new content) to the end and ensure you aren’t accidentally breaking the cache by including something dynamic.

Tip: Use metadata

We’ve seen customers accidentally invalidate their cache by including a timestamp early in their request for later lookup/debugging. Move that to metadata where it will not impact the cache!
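One way to apply this is to build every request from a fixed template: static content first, volatile content last, and debugging fields in metadata. The sketch below only assembles a Responses-style payload as a dict and does not call the API. The constants, the build_request helper, and the metadata keys are hypothetical placeholders.

```python
from datetime import datetime, timezone

# Static content: identical bytes on every request, so it can be cached.
STATIC_INSTRUCTIONS = "You are a support assistant. Follow the policies below."
REFERENCE_CONTEXT = "Policy handbook text goes here."


def build_request(user_text: str, request_id: str) -> dict:
    """Assemble a request with a stable prefix and a volatile suffix."""
    return {
        "model": "gpt-5.1",
        "instructions": STATIC_INSTRUCTIONS,
        "input": [
            {"role": "developer", "content": REFERENCE_CONTEXT},
            # Volatile content goes last so it never disturbs the prefix.
            {"role": "user", "content": user_text},
        ],
        # Timestamps and ids live in metadata, outside the prompt.
        "metadata": {
            "request_id": request_id,
            "sent_at": datetime.now(timezone.utc).isoformat(),
        },
    }


first = build_request("Where is my order?", "req-1")
second = build_request("How do I reset my password?", "req-2")
print(first["input"][0] == second["input"][0])
```

Because the timestamp never appears in instructions or input, two requests sent at different times still share the same prompt prefix.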

4.3 Keep Tools and Schemas Identical

Tools, schemas, and their ordering contribute to the cached prefix - they get injected before developer instructions which means that changing them would invalidate the cache. This includes:

- Schema key changes

- Tool ordering changes

- Changes to instructions
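A cheap way to catch accidental drift is to fingerprint the static parts of the request and log the result. This is a sketch under stated assumptions: the prefix_fingerprint helper is hypothetical, and hashing a JSON serialization is only a proxy for how the server actually renders the prompt. It is deliberately order-sensitive, so reordering tools changes the fingerprint.

```python
import hashlib
import json
from typing import Optional


def prefix_fingerprint(instructions: str, tools: list,
                       response_schema: Optional[dict] = None) -> str:
    """Short hash of the static request parts; order of tools and keys matters."""
    payload = json.dumps(
        {"tools": tools, "schema": response_schema, "instructions": instructions},
        separators=(",", ":"),
        ensure_ascii=False,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:16]


weather = {"type": "function", "name": "get_weather", "parameters": {"type": "object"}}
location = {"type": "function", "name": "get_location", "parameters": {"type": "object"}}

stable = prefix_fingerprint("Be concise.", [weather, location])
reordered = prefix_fingerprint("Be concise.", [location, weather])
print(stable == reordered)
```

If the fingerprint changes between deployments or between turns of the same conversation, expect cache misses until the new prefix has been seen again.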

Tip: Adjust tools without breaking prompt caching

Leverage the allowed_tools tool_choice option, which lets you restrict the tools the model can call for a request without changing the tools array and busting the cache. List your full toolkit in tools, and then use an allowed_tools block to specify which tools can be used on a single turn.

```python
# Static “tools” stay in the cached prompt prefix:
tools = [get_weather_def, get_location_def, calendar_def, …]

# Per-call “allowed_tools” only lives in request metadata, not in the prefix:
allowed_tools = {"mode": "auto", "tools": ["get_weather", "get_location"]}
```

4.4 Use prompt_cache_key to Improve Routing Stickiness

prompt_cache_key is a parameter that we combine with a hash of the first 256 tokens of the prompt to help requests route to the same engine, increasing the chance that identical prefixes land on the same machine. It’s effective: one of our coding customers saw their hit rate improve from 60% to 87% when they started using prompt_cache_key. It is especially useful when you send different requests that share the same initial context but vary later. The parameter helps the system distinguish between requests that look the same at the start, so you’ll get more effective cache hits.

A node can only handle about 15 requests per minute for a given prefix and prompt_cache_key, so requests over that rate may spill over to additional machines (reducing cache effectiveness).

Real Use Cases

Choose granularity to keep each prefix + key combination below ~15 requests per minute. You may wonder whether to associate a key with a user or a conversation. Your traffic patterns should inform your choice. For coding use cases we’ve seen:

- Per-user keys improve reuse across related codebase conversations

- Per-conversation keys scale better when users run many unrelated threads in parallel.

Grouping several users to share a key can also be a good approach. A simple algorithm is to hash each user id and take it modulo a number of “buckets”, choosing the bucket count so that each key lands close to 15 RPM to maximize caching performance.
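The bucketing idea can be sketched as follows. The helper names, the namespace string, and the traffic figure are illustrative assumptions. A stable hash from hashlib is used instead of Python's built-in hash(), which is randomized per process and would scatter the same user across keys after a restart.

```python
import hashlib
import math

TARGET_RPM_PER_KEY = 15  # approximate per-key ceiling described above


def bucket_count(total_rpm: float, target_rpm: float = TARGET_RPM_PER_KEY) -> int:
    """Number of keys needed so each one stays at or below the target rate."""
    return max(1, math.ceil(total_rpm / target_rpm))


def cache_key_for_user(user_id: str, buckets: int, namespace: str = "support-bot") -> str:
    """Deterministically map a user id onto one of `buckets` shared cache keys."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % buckets
    return f"{namespace}-bucket-{bucket}"


buckets = bucket_count(total_rpm=120)  # hypothetical traffic for one shared prefix
key = cache_key_for_user("user-42", buckets)
print(buckets, key)
```

The resulting string would be passed as prompt_cache_key on each request. Recompute the bucket count when traffic for the shared prefix changes materially, keeping in mind that changing it remaps users to new keys.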

Tip: Test Flex Processing instead of the Batch API

If you have a latency-insensitive workflow, you may be using the Batch API. For more flexibility around caching, consider using Flex Processing. It offers the same 50% discount on tokens but on a per-request basis, so you can control the RPM, use extended prompt caching, and include a prompt_cache_key with the request, leading to higher cache hit rates. Flex is best when prototyping or for non-inference intensive prod workloads.

Through testing the same repeat prompt across Flex and Batch, I saw an 8.5% increase in cache rates when I used Flex with extended prompt caching and a prompt_cache_key across 10,000 requests. That cache improvement means a 23% decrease in input token cost.

There isn’t model parity for caching - the Batch API does not support caching for pre-GPT-5 models, so if you’re using o3 or o4-mini, you should consider switching to Flex to take advantage of caching. Check the most up to date info on this in our pricing docs.

Insight: prompt_cache_key as shard key

It might be helpful to think of the prompt_cache_key as a database shard key when thinking about how we route the request on the backend. The considerations are very similar when optimizing for the parameter. Like shard keys, granularity is a balancing act. Each machine can only handle about 15 RPM for a given prefix, so if you use the same key on too many requests, requests will overflow to multiple machines. For each new machine, you’ll start the cache anew. On the other hand, if your key is too narrow, traffic spreads out across machines and you lose the benefit of cache reuse. Routing is still load-balanced: prompt_cache_key increases the chance that similar prompts hit the same server but does not guarantee stickiness. Caching is always best-effort!

4.5 Use the Responses API instead of Chat Completions

As we outlined in Why we built the Responses API, our internal benchmarks show 40-80% better cache utilization on requests when compared to Chat Completions.

This is because the raw chain of thought tokens get persisted in the Responses API between turns via previous_response_id (or encrypted reasoning items if you’re stateless). Chat Completions does not offer a way to persist these tokens. Better performance from reasoning models using the Responses API is an excellent guide to understanding this in more depth.

4.6 Be thoughtful about Context Engineering

At its core, context engineering is about deciding what goes into the model’s input on each request. Every model has a fixed context window, but curating what you pass on each request isn’t just about staying under the limit. As the input grows, you’re not only approaching truncation - you’re also increasing replay cost and asking the model to distribute its attention across more tokens. Effective context engineering is the practice of managing that constraint intentionally. However, when you drop, summarize or compact earlier turns in a conversation, you’ll break the cache. With the rise of long-running agents and native compaction, it’s important to keep caching in mind when architecting for context engineering, so that you strike the right balance between cost savings and intelligence.

```python
from openai import OpenAI

client = OpenAI()

# `conversation` is the running list of input items for this thread.
response = client.responses.create(
    model="gpt-5.2-codex",
    input=conversation,
    store=False,
    context_management=[{"type": "compaction", "compact_threshold": 100000}],
)
```

A common failure mode is an overgrown tool set that introduces ambiguity about which tool should be invoked. Keeping the tool surface minimal improves decision quality and long-term context. When curating tools on a per-request basis, use the tip in 4.3 and leverage the allowed_tools tool_choice option for pruning.

Practical rule

Leverage your evals to choose the compaction method and frequency that balances cost (both from reducing total input tokens via truncation/summarization as well as caching) and intelligence gained from careful context engineering.

5. Troubleshooting: why you might see lower cache hit rates

Common causes:

- Tool or response format schema changes

- Context Management/Summarization/Truncation

- Changes to instructions or system prompts

- Changes to reasoning effort - that parameter is passed in the system prompt

- Cache Expiration: too much time passes and the saved prefix is dropped.

- Using Chat Completions with reasoning models. Chat Completions drops reasoning tokens between turns, which lowers cache hits.
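When the cause is not obvious, comparing two consecutive request payloads usually reveals it. The sketch below finds the first character at which their JSON serializations diverge. The helper name and the sample payloads are hypothetical, and serialized JSON is only an approximation of the token sequence the model sees.

```python
import json
from typing import Optional


def first_divergence(previous: dict, current: dict, context: int = 40) -> Optional[dict]:
    """Locate where two serialized requests stop sharing a prefix."""
    a = json.dumps(previous, ensure_ascii=False)
    b = json.dumps(current, ensure_ascii=False)
    limit = min(len(a), len(b))
    index = next((i for i in range(limit) if a[i] != b[i]), limit)
    if index == len(a) == len(b):
        return None  # payloads are identical
    start = max(0, index - context)
    return {
        "index": index,
        "previous": a[start:index + context],
        "current": b[start:index + context],
    }


turn_1 = {"instructions": "Today is Monday. Be concise.", "input": "Hello"}
turn_2 = {"instructions": "Today is Tuesday. Be concise.", "input": "Hello again"}
print(first_divergence(turn_1, turn_2))
```

An early divergence index, as in this example where a date leaks into the instructions, means almost nothing after that point can be served from cache.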

6. Extended Prompt Caching & Zero Data Retention

Caches generally last 5-10 minutes of inactivity, up to a maximum of one hour, but enabling extended retention allows caches to last for up to 24 hours.

Extended Prompt Caching works by offloading the key/value tensors to GPU-local storage when memory is full, significantly increasing the storage capacity available for caching. Because the cache is stored for longer, this can increase your cache hit rate. Internally we have seen improvements of 10% for some use cases.

If you don’t specify a retention policy, the default is in_memory.

```json
{
  "model": "gpt-5.1",
  "input": "Write me a haiku about froge...",
  "prompt_cache_retention": "24h"
}
```

KV tensors are the intermediate representation from the model’s attention layers produced during prefill. Only the key/value tensors may be persisted in local storage; the original customer content, such as prompt text, is only retained in memory. Prompt caching can comply with Zero Data Retention because cached prompts are not logged, not saved to disk, and exist in memory only as needed. However, extended prompt caching requires storing key/value tensors to GPU-local storage as application state. This storage requirement means that requests leveraging extended prompt caching are not ZDR eligible - however if a ZDR customer explicitly asks in their request for Extended Prompt Caching the system will honor that. ZDR customers should be cautious about this and consider policies to monitor their requests for this parameter.
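For ZDR customers, one possible monitoring policy is a client-side guard that inspects outgoing payloads before they are sent. This is a minimal sketch, assuming requests are built as dicts. The helper names are hypothetical, and stripping the parameter (falling back to the default in-memory retention) is just one policy choice; raising an error or logging an alert would work equally well.

```python
def uses_extended_retention(payload: dict) -> bool:
    """True if the request opts in to 24-hour extended prompt caching."""
    return payload.get("prompt_cache_retention") == "24h"


def enforce_in_memory_retention(payload: dict) -> dict:
    """Return a copy of the payload without the extended-retention opt-in."""
    if not uses_extended_retention(payload):
        return payload
    cleaned = dict(payload)  # copy so the caller's dict is not mutated
    cleaned.pop("prompt_cache_retention")
    return cleaned


outgoing = {
    "model": "gpt-5.1",
    "input": "Summarize this ticket.",
    "prompt_cache_retention": "24h",
}
safe = enforce_in_memory_retention(outgoing)
print(uses_extended_retention(outgoing), uses_extended_retention(safe))
```

Placing a check like this in a shared client wrapper keeps the policy in one place instead of relying on every call site to omit the parameter.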

Insight: What Is Actually Cached?

The KV cache just holds the model’s key/value tensors (linear projections of the hidden states) so we can reuse them on the next inference step. The KV cache is an intermediate representation. It’s essentially just a bunch of numbers internal to the model - that means no raw text/multi-modal inputs are ever stored, regardless of retention policy.

7. Realtime API

Caching in the Realtime API works similarly to the Responses API: as long as the prefix stays stable, cache hits are preserved. But when the context window is exceeded and the start of the conversation shifts, cache hits drop. Given that the context window of the Realtime API (currently 32k tokens) is much shorter than that of our frontier models, this is even more relevant.

7.1 Retention Ratio

By default (truncation: “auto”), the server removes just enough old messages to fit within the context window. This “just-in-time” pruning shifts the start of the conversation slightly on every turn once you’re over the limit, so this naive strategy causes frequent cache misses.

The retention_ratio setting changes this. It’s a configurable truncation strategy that lets you control how much of the token context window is retained versus dropped, balancing context preservation with cache-hit optimization.

In this example, retention_ratio: 0.7 means that when truncation happens, the system keeps roughly 70% of the existing conversation window and drops the oldest ~30%. The drop happens in one larger truncation event (instead of small incremental removals).

```json
{
  "event": "session.update",
  "session": {
    "truncation": {
      "type": "retention_ratio",
      "retention_ratio": 0.7,
      "token_limits": {
        "post_instructions": 8000
      }
    }
  }
}
```

This creates a more stable prefix that survives across multiple turns, reducing repeated cache busting.

Trade-off: you lose larger portions of conversation history at once. That means the model may suddenly “forget” earlier parts of the dialogue sooner than with gradual truncation. You’re effectively trading off memory depth for cache stability (more consistent prefix).

Here’s what this impact looks like in the naive per-turn truncation approach vs using retention_ratio.
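The difference can also be illustrated with a toy simulation. This is a deliberately simplified model, not the Realtime API's actual behavior: it assumes every turn adds the same number of tokens, ignores instructions and 128-token block granularity, and counts a turn's tokens as cached only if they form a prefix shared with the previous request. The function name and parameters are illustrative.

```python
from typing import Optional


def simulate_hit_rate(turns: int, tokens_per_turn: int, limit: int,
                      retention_ratio: Optional[float] = None) -> float:
    """Fraction of input tokens served from cache over a whole conversation."""
    window: list = []    # ids of messages currently in context, oldest first
    previous: list = []  # the window sent on the previous turn
    cached = total = 0
    for turn in range(turns):
        window.append(turn)
        if len(window) * tokens_per_turn > limit:
            # "auto" trims just enough to fit; retention_ratio trims further.
            target = limit if retention_ratio is None else int(limit * retention_ratio)
            while len(window) * tokens_per_turn > target:
                window.pop(0)
        shared = 0
        for old_id, new_id in zip(previous, window):
            if old_id != new_id:
                break
            shared += 1
        cached += shared * tokens_per_turn
        total += len(window) * tokens_per_turn
        previous = list(window)
    return cached / total


auto_rate = simulate_hit_rate(turns=200, tokens_per_turn=1000, limit=32000)
ratio_rate = simulate_hit_rate(turns=200, tokens_per_turn=1000, limit=32000,
                               retention_ratio=0.7)
print(f"auto: {auto_rate:.1%}, retention_ratio=0.7: {ratio_rate:.1%}")
```

In this model, per-turn truncation moves the start of the window on every turn once the limit is reached, so nothing is shared with the previous request. The larger, less frequent truncation leaves several turns in a row with an unchanged prefix.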

Conclusion

Prompt caching is one of the highest-leverage optimizations available on the OpenAI platform. When your prefixes are stable and your routing is well-shaped, you can materially reduce both cost and latency without changing model behavior or quality.

The key ideas are simple but powerful: stabilize the prefix, monitor cached_tokens, stay under the ~15 RPM per prefix+key limit, and use prompt_cache_key thoughtfully. For higher-volume or latency-insensitive workloads, consider Flex Processing and extended retention to further improve cache locality.

Caching is best-effort and routing-aware, but when engineered intentionally, it can deliver dramatic improvements in efficiency. Treat it like any other performance system: measure first, iterate deliberately, and design your prompts with reuse in mind.